Interdisciplinary research is critical to solving most of our current environmental crises and human problems. It is particularly relevant to ecology (e.g. landscape ecology, environmental humanities, and the ecosystem services (ES) concept). This volume of the journal Ecosystems from 1999 has some great articles on the issue.

One of the biggest challenges involved in interdisciplinary research is the influence of disciplinary silos on science research and communication.

…the public is interested in the big picture painted by science, and that picture is rarely painted by a single discipline. We find, therefore, that communicating interdisciplinary results to the public is generally easier than communicating disciplinary results.

– Daily & Ehrlich (1999)

To non-scientists, interdisciplinary research makes sense, because life is interdisciplinary. But communicating interdisciplinary results within the disciplines themselves can be a lot harder. Every scientific discipline has its own approach to concepts, methodology, analysis and research in general. That’s what maintains knowledge diversity within ‘science’. But it can cause misunderstanding between disciplines when we forget that other kinds of scientists may do science slightly differently from the ‘norm’ in our own field.

Yeasts

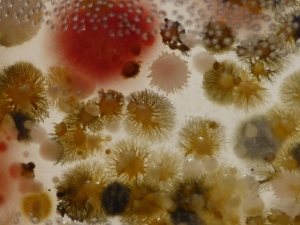

A few months ago, I attempted something radical: a pilot study collecting environmental yeasts from insects. I was already working on a project about insect-mediated ES in apple orchards, and I knew that some insects carry yeasts and other microbes on their bodies. So I wondered whether flying insects might carry ‘beneficial’ yeasts that help break down discarded apples after harvest, thus providing an ES to growers.

Now (here’s the radical part) I have absolutely zero background in microbiology and my experience with yeasts is limited to inhaling a good red wine. However, because I’m based at a small university campus, our ecology laboratory facilities are shared with the community health researchers. Bonus! It seemed possible that I could do this, because surely someone from the health labs could help me out with the basics.

I already had the field sites lined up, so I only needed to buy some agar plates to run the experiment. My plan was to try and catch insects in the field (mainly bees and flies), trap them in the plate so they walked around on it for some period of time, and then compare those plates with swabs from decaying fruit. Sounds easy, right? Science is never easy.

Funnily enough, the biggest obstacle I encountered throughout the process was not my own lack of expertise, but the opposition I received from the health staff. Some of them were actively against helping me, supposedly because I had no knowledge of their discipline (the implication being that I was wasting everyone’s time). I managed to get access to an incubator to culture the plates, but other than that I was on my own. And because I didn’t know what I was doing, I gave up.

Financially and logistically, this failed pilot study didn’t impact the rest of my work, so I just counted my losses and moved on. But it made me realise how hard it is, especially for early career researchers like myself, to try and get interdisciplinary projects off the ground.

Neuroscience

This week, another experience brought the same point home. Recently, a paper by Gregory Bratman et al. was published in PNAS on how nature walks may improve mental well-being by helping to reduce rumination (dwelling compulsively on negative thoughts), a cognitive pattern that increases risk of depression and anxiety-related mental illness. I didn’t read the paper, but the findings seemed like common sense, and I knew from personal experience that the effect exists. I also noted that one of the co-authors, Gretchen Daily, is an ecologist who literally wrote the book on ecosystem services, and I thought it was neat that ecologists and neuroscientists were collaborating to develop a new concept that might help nature conservation, ‘psychological ecosystem services’.

Yesterday morning, I was surprised to see a tweet claiming that the research was ‘dodgy’. I asked why, and was directed to this blog post by Micah Allen, a postdoctoral researcher in neuroscience. It’s a bit nasty, but some of his points seemed reasonable when read in isolation, from a general science perspective. So I went back and read the whole paper (bearing in mind that I am not a neuroscientist, so I didn’t follow all the jargon)…and I didn’t have a major problem with the study. Of course I didn’t recognise any of the neuro researchers, but a number of respected ecologists were involved in the paper: Gretchen Daily is Bratman’s PhD co-supervisor, and another eminent ecologist (Paul Ehrlich) and a couple of well-known conservation scientists (Peter Kareiva, Heather Tallis) were listed in the acknowledgements.

Was I missing something? According to his website, Micah Allen (the blog author) is a Post-doctoral Fellow at UCL Institute of Cognitive Neuroscience. He has an impressive CV and 14,700 Twitter followers. His tweet about the blog post was shared 164 times, including by some high-profile science writers and bloggers (therefore, potentially reaching a much wider non-scientific audience).

To a non-neuroscientist like myself, it seemed that this guy must know what he was talking about. Yet, many of the co-authors and named reviewers of this paper also appeared to know what they were talking about, as they were experienced and award-winning researchers and field-leaders in neuroscience or psychology (here, here, here, here, here). (And here’s another neuroscientist’s more positive view on the paper). I was confused.

According to Bratman’s webpage, his interdisciplinary PhD research uses the science, concepts and methods of psychology, neuroscience and ecology to define and study ‘psychological ES’. This is a new concept (with an old history, of course) that Bratman, Hamilton & Daily introduced in 2012 in this literature review. The point is that no one appears to have worked out how to test this concept neurologically, so Bratman et al. are having a go by combining the expertise of ecologists, neuroscientists and psychologists (see also this larger study with similar methods from the same authors).

I can’t comment specifically on the technical aspects of this study, but I think Allen’s blog post highlights how difficult it can be for interdisciplinary research to be accepted by peers. One of the main issues raised in the post was that such a ‘low-effort’ pilot study by ‘non-experts’ was published in PNAS; another was that the main result is only ‘marginally significant’ (p value of 0.07). That doesn’t make it shoddy research. P values are not always reliable or useful. And in ecology, a p value of 0.07 is certainly not the end of the world – there can still be a clear biological interaction without a significant p value.
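One reason a p value is a poor stand-alone summary is that it mixes effect size with sample size. The sketch below is only an illustration under stated assumptions: a one-sample test on standardized change scores, a normal (z) approximation instead of a t distribution, and a hypothetical effect size d = 0.4 with hypothetical sample sizes. None of these numbers come from the Bratman et al. paper.

```python
import math


def two_sided_p_normal(effect_size_d: float, n: int) -> float:
    """Two-sided p value for a standardized mean change (Cohen's d) in a
    sample of n, using the normal approximation z = d * sqrt(n).
    A t distribution would be more accurate for small n; illustration only."""
    z = abs(effect_size_d) * math.sqrt(n)
    # For a standard normal Z, P(|Z| >= z) = erfc(z / sqrt(2)).
    return math.erfc(z / math.sqrt(2))


if __name__ == "__main__":
    d = 0.4  # hypothetical effect size, not taken from any study
    for n in (20, 80):
        print(f"d = {d}, n = {n}: p ~ {two_sided_p_normal(d, n):.4f}")
```

With these inputs the identical effect gives p above 0.05 for n = 20 and well below 0.05 for n = 80, so whether it crosses the threshold says as much about sample size as about the effect itself.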

Statistical machismo is not cool in any science. I think it’s great that this study included just the analysis needed to address the very simple research aim (which was “to observe whether a 90-minute nature experience has the potential to decrease rumination”, my emphasis). Yes, it sounds like a pilot study, but who cares? This is how science advances – by putting ground-breaking research out there, even with limitations, so we can all start building on that knowledge.

And Bratman et al. don’t make any overtly causal claims in the paper, which is appropriate reporting for observational studies with small sample sizes, where other unmeasured factors may be involved (see my previous post on this). They also clearly explain how much more research is needed to confirm these results.

Challenging disciplines

Interdisciplinary research is really hard, and it can be even harder for doctoral students. But it’s a challenge we have to accept. Sometimes, to adequately cover all the elements relevant to a particular interdisciplinary research goal, compromises have to be made on details that a study focused on only one of those elements could address more precisely. But, as long as those limitations are acknowledged, this doesn’t (in itself) make the study ‘dodgy’.

As scientists or science writers, making claims like this about cross-disciplinary studies doesn’t help scientific progress, and it doesn’t help public understanding of science. A better approach is to explain clearly what discipline-specific details may have been overlooked, how these details affect the overall interdisciplinary outcome, and how future studies could build on that knowledge.

© Manu Saunders 2015

There are many things wrong with the “Walk in the park” study, beyond the non-significant behavioural interaction. If you plot the data you see that the result is clearly driven by regression to the mean (https://en.wikipedia.org/wiki/Regression_toward_the_mean).
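Regression to the mean, the mechanism this comment points to, can be illustrated with a small simulation. This is a generic sketch under assumed parameters (group size, trait spread, measurement noise and number of simulated experiments are all arbitrary choices, not values from the study): two groups are measured before and after with no treatment at all, and the experiments that happened to start with the largest baseline gap between groups are then examined. It shows that the phenomenon exists; it does not show that it explains this particular result.

```python
import random
import statistics


def simulate_null_experiments(n_per_group=20, n_experiments=2000,
                              trait_sd=5.0, noise_sd=5.0, seed=1):
    """Simulate two-group pre/post experiments with NO treatment effect.

    Each participant has a stable trait score; the pre and post measurements
    are that trait plus independent measurement noise. Returns a list of
    (baseline_gap, post_gap) pairs, where a gap is group A mean minus group B mean.
    """
    rng = random.Random(seed)
    results = []
    for _ in range(n_experiments):
        group_means = []
        for _ in range(2):
            traits = [rng.gauss(30.0, trait_sd) for _ in range(n_per_group)]
            pre = [t + rng.gauss(0.0, noise_sd) for t in traits]
            post = [t + rng.gauss(0.0, noise_sd) for t in traits]
            group_means.append((statistics.mean(pre), statistics.mean(post)))
        (pre_a, post_a), (pre_b, post_b) = group_means
        results.append((pre_a - pre_b, post_a - post_b))
    return results


def extreme_baseline_summary(results, top_fraction=0.1):
    """Mean absolute gap before and after, among the experiments whose
    baseline gap was largest in absolute value."""
    ranked = sorted(results, key=lambda r: abs(r[0]), reverse=True)
    k = max(1, int(len(ranked) * top_fraction))
    top = ranked[:k]
    mean_abs_pre = statistics.mean(abs(pre) for pre, _ in top)
    mean_abs_post = statistics.mean(abs(post) for _, post in top)
    return mean_abs_pre, mean_abs_post


if __name__ == "__main__":
    pre_gap, post_gap = extreme_baseline_summary(simulate_null_experiments())
    print(f"largest baseline gaps: mean |pre| = {pre_gap:.2f}, "
          f"mean |post| = {post_gap:.2f}")
```

Part of each large baseline gap is measurement noise that does not recur at retest, so in the selected experiments the post gap is, on average, noticeably smaller than the baseline gap, even though nothing happened between the two measurements.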

Also, the authors fall into reverse inference by assuming that a certain cognitive process occurs because a certain brain area “lights up”, when brain areas can “light up” for a multitude of reasons. A more sensible approach would be to correlate the MRI effect size with the behavioural scores on an individual-subject basis.

Finally, PNAS is not intended for pilot studies. Many excellent, well-designed and finished studies are rejected from PNAS every week. The fact that this study was contributed highlights this issue; one cannot help but wonder whether the paper would have been accepted if it had not been contributed by an NAS member.

Thanks for the comment. Where did you find the data to plot?

The means of the behavioural data are actually included in the study, on page 2, in the top paragraph of the right-hand column:

“There was a simple effect of time for the nature group [t(17) = −2.69, P < 0.05, d = 0.34; mean change pre- to postwalk = −2.33, SE = 0.55; mean score prewalk = 35.39, SE = 1.60; mean score postwalk = 33.06, SE = 1.61], with decreases from pre- to postwalk. There was no such effect for the urban group (mean score prewalk = 30.11, SE = 2.61; mean score postwalk = 30.16, SE = 2.50).”

Essentially, there was a fairly large difference between groups in rumination before the walk but there was no difference after the walk (although, because of the absence of a significant interaction the difference between these differences was in itself not significant).
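The group-level arithmetic behind this comparison can be reproduced from the four quoted means alone. A minimal sketch: it uses only those means, so it cannot say anything about significance, which would need participant-level data or the standard error of the interaction term, neither of which is quoted here.

```python
# Group means of the rumination scores, as quoted from the paper above.
REPORTED_MEANS = {
    "nature": {"pre": 35.39, "post": 33.06},
    "urban": {"pre": 30.11, "post": 30.16},
}


def summarize_means(means):
    """Return per-group change, baseline gap, post-walk gap and the
    difference in differences (nature change minus urban change)."""
    change = {g: round(m["post"] - m["pre"], 2) for g, m in means.items()}
    baseline_gap = round(means["nature"]["pre"] - means["urban"]["pre"], 2)
    post_gap = round(means["nature"]["post"] - means["urban"]["post"], 2)
    diff_in_diff = round(change["nature"] - change["urban"], 2)
    return change, baseline_gap, post_gap, diff_in_diff


if __name__ == "__main__":
    change, baseline_gap, post_gap, did = summarize_means(REPORTED_MEANS)
    print("change by group:", change)
    print("baseline gap:", baseline_gap, "post-walk gap:", post_gap)
    print("difference in differences:", did)
```

With the quoted values, the nature group starts 5.28 points higher than the urban group and ends 2.9 points higher; its change (-2.33) minus the urban group's change (+0.05) gives a difference in differences of -2.38.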

I agree with Enzo Tagliazucchi. The walk-in-the-park study is scientifically very poor for the reasons both Enzo and Micah Allen already pointed out. Non-significant interactions being treated as significant, the result seems to be driven by regression to the mean (baseline difference between groups disappears after treatment) and the fMRI is all about reverse inference.

The experimental design itself seems a bit bizarre, as neither the “nature” nor the “urban” condition really seems very satisfactory. Certainly, given that there are no conceptual replications or other variations, it would be completely impossible to rule out countless other factors (social interaction, presence of cars, etc.). Of course, that hardly matters because the behavioural effect is tiny and one would require much more data to establish that it is reliable. As such, we can’t have much confidence that the effect is even real, let alone that the experiment tells us something interesting. Science isn’t about whether a result “feels right” but whether the hypothesis is sound and the data support the claims made about it. This is not the case here.

In the interest of full disclosure, Micah is a colleague and collaborator of mine. But this doesn’t change my objective view of this study, and numerous other commenters agree with his evaluation. I agree with you that the tone of his post was quite aggressive. However, this is coming from somewhere: the fields of cognitive neuroscience and psychology are currently locked in tense debates and doubts over the reproducibility of results and the scientific process in general. All of the issues we raised here about this walk-in-the-park study have been raised previously as warnings that they contribute to this crisis of confidence in science. The field should have moved on from publishing findings like this – certainly not in a journal some people still consider a high-impact outlet. The reviewers of this study should have spotted these problems. Presumably the main reason they did not is that this was a contributed submission to PNAS circumventing an impartial, expert review process. I was under the impression that PNAS had closed down this avenue – apparently not.

I do get what you’re saying about interdisciplinary work. I have heard this from several people before. I understand that publishing interdisciplinary research can have its challenges and perhaps there should be measures to improve that. However, it cannot be at the expense of scientific quality.

Thanks for your comment, Sam. The aim of this post was to start a discussion about the challenges of interdisciplinary research, in particular the challenges faced from ‘pure’ disciplines that speak different ‘languages’. It’s unfortunate that the comments have become caught up over this single paper. As I said in the blog, I’m an ecologist, working on ecosystem services and interdisciplinary research, so I can’t/won’t comment on the neuro aspects of this paper. I’m confused about your statement that this was a “contributed submission to PNAS circumventing an impartial, expert review process”. Are you claiming this paper didn’t undergo impartial peer review? How do you know this?

I understand you are concerned about disreputable science and reproducibility – every scientist I know is concerned about that, and this concern is not unique to the field of neuroscience. But ‘dodgy’ is a very strong word, implying scientific misconduct – neither interdisciplinary studies nor pilot studies are inherently ‘dodgy’ just because they don’t conform to what may be the methods/data ‘norm’ for a ‘pure’ single-discipline paper. Interdisciplinary research is critical to addressing most of our environmental and human problems, as I discuss in the post, so I think it is more damaging to science as a whole to cast aspersions on these kinds of studies without clear proof of said ‘dodginess’.

We probably have different interpretations of ‘dodgy’. I didn’t mean it in the sense that it might be fraudulent or necessarily due to deliberate misconduct. That’s a strong allegation to make and never something I would say without having good reason to believe so. Rather, I think this is most likely a spurious result and potentially due to unintentional p-hacking (or to put it more poetically, the authors may have been “wandering in the garden of forking paths”: http://www.stat.columbia.edu/~gelman/research/unpublished/p_hacking.pdf). Either way, I don’t think this has anything to do with being interdisciplinary – in fact, as discussed by Tim below, the interdisciplinary nature of it may have helped get this into a high-impact journal.

Regarding the ‘contributed submission’: this is a way to submit manuscripts that, to my knowledge, is unique to PNAS. You find a member of the National Academy of Sciences who acts as editor, contributes the manuscript and gets it through with potentially very lax peer review. This is not to say that all such papers in PNAS are necessarily reviewed poorly, but it certainly opens up the option for substantial nepotism. When I look at publications in that journal I certainly get the impression that the quality of contributed submissions is well below that of direct submissions (although I generally find papers in PNAS in cognitive neuroscience to be of questionable quality). The submission guidelines are described here: http://www.pnas.org/site/authors/guidelines.xhtml

In my view the key point about this paper is that it comes from authors with an excellent track record, and it is an interesting piece of inter-disciplinary work – as Manu Saunders points out – but that despite this, the results suffer from a very simple but rather important technical flaw, as Micah pointed out.

It looks so promising from a distance, the narrative is compelling, but only when you look into the details of the results does it run aground.

I think you have it exactly backwards: the challenge to this paper is not because it is inter-disciplinary. In fact, I suspect that the reason it was published in such a high-impact journal was because it is inter-disciplinary and, as you mention, intuitive and consistent with many people’s experience. There is nothing wrong with reporting weak/insignificant results (in fact I think it is something of a moral obligation), but if those weak/insignificant findings are the core of the paper, you’ve built your house on sand. It is almost certain this paper would not have been published in PNAS (or perhaps anywhere) if the narrative were not so appealingly interdisciplinary. And as mentioned by previous commentators, the field of human neuroimaging is flooded with these kinds of “sexy, but very unlikely to replicate” findings.

You make a very good point there too. That’s probably completely right. It has this high impact whiff precisely because of its interdisciplinary nature.

Thanks for the comment, Tim. I agree with you, there is nothing wrong with publishing weak/insignificant results – in fact, I think science as a whole would benefit greatly if there were more of it, especially in high-impact journals. I’m confused about your comment that the paper was ‘almost certainly’ only published in PNAS because it was interdisciplinary – is that a bad thing? (Also see my comment to Sam above.)
